Machine Learning

Machine learning in iOS:

Machine learning in iOS refers to the integration of artificial intelligence techniques into applications running on Apple’s iOS devices, such as iPhones and iPads. Essentially, it’s about teaching apps to learn from data and improve over time without being explicitly programmed. It enables apps to become more personalized, predictive, and capable of understanding user behavior.

How it works

- Apps gather data from various sources. This could be user interactions, sensor data from the device (like accelerometer or GPS), or any other relevant data.

- The collected data is used to train machine learning models. These models are algorithms that can recognize patterns and make predictions based on the input data. For example, a model might learn to recognize faces in photos or predict text when you start typing.

- Once trained, these models are integrated into iOS apps. When users interact with the app, the machine learning models process the data and provide intelligent responses or actions.

- As users continue to interact with the app, it can collect more data. Developers can use this data to retrain or fine-tune the models, usually offline before shipping an app update and in some cases directly on the device. This creates a feedback loop that makes the app smarter over time.
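To make the collect, train, predict, and refine loop concrete, here is a deliberately tiny sketch in plain Swift. It is not Core ML: it is a hypothetical nearest-centroid classifier, and the type name and the two-number feature vectors (imagined as summarized accelerometer readings) are made up for illustration. "Training" averages the labeled examples for each label, "prediction" picks the label whose average is closest, and calling `learn` again with new data plays the role of the feedback loop.

```swift
// Hypothetical toy classifier: training averages each label's examples,
// prediction returns the label whose average (centroid) is closest.
// Assumes every feature vector has the same length.
struct NearestCentroidClassifier {
    private var sums: [String: [Double]] = [:]
    private var counts: [String: Int] = [:]

    // Add one labeled example. Calling this again later refines the model.
    mutating func learn(_ features: [Double], label: String) {
        if var sum = sums[label] {
            for i in sum.indices { sum[i] += features[i] }
            sums[label] = sum
        } else {
            sums[label] = features
        }
        counts[label, default: 0] += 1
    }

    // Return the label with the nearest centroid (squared Euclidean distance),
    // or nil if nothing has been learned yet.
    func predict(_ features: [Double]) -> String? {
        var best: (label: String, distance: Double)? = nil
        for (label, sum) in sums {
            let n = Double(counts[label]!)
            var distance = 0.0
            for i in sum.indices {
                let diff = sum[i] / n - features[i]
                distance += diff * diff
            }
            if best == nil || distance < best!.distance {
                best = (label, distance)
            }
        }
        return best?.label
    }
}

var model = NearestCentroidClassifier()
model.learn([0.1, 0.2], label: "still")
model.learn([0.0, 0.1], label: "still")
model.learn([2.5, 3.0], label: "walking")
print(model.predict([2.0, 2.8]) ?? "unknown") // prints "walking"
```

Real models are far more capable, but the shape is the same: learn from examples, predict on new input, and improve as more labeled data arrives.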

Machine Learning APIs

Apple offers a range of frameworks for adding machine learning to iOS apps, from running custom models to analyzing images, understanding text, and recognizing speech. These capabilities let users interact with apps in more intuitive and accessible ways, including through voice commands. Here are some popular options:

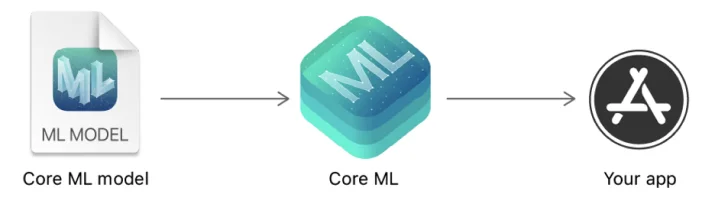

Core ML:

Core ML delivers fast performance on Apple devices with easy integration of machine learning models into your apps. Add prebuilt machine learning features into your apps using APIs powered by Core ML or use Create ML to train custom Core ML models right on your Mac.

How it works

- Just like how you learn to ride a bike by practicing, a machine learning model learns from lots of examples. During training (for example with Create ML on a Mac), it looks at many pictures of cats to learn what cats look like, or listens to many sounds to learn what a dog barking sounds like. Core ML is the part that runs the trained model on the device.

- Once the model is trained, Core ML can use it to make guesses, or predictions. For example, if you show it a picture, it can tell you whether it’s a cat or a dog based on what the model learned from all those examples.

- The trained model is bundled with apps on iPhones and iPads, and Core ML runs it directly on the device. So, if you have a drawing app, Core ML can help it recognize what you draw and give suggestions to make your drawings even better.

- Apps with Core ML can get smarter over time, but usually not on their own: developers retrain models with new data and ship updated versions, and models marked as updatable can also be fine-tuned on the device with a user’s own data. It’s like having a friend who gets better at games the more they practice.

Practical Applications of Core ML

Let’s explore some real-world examples of how Core ML can supercharge iOS apps:

- Image Recognition: Imagine a photo editing app that can automatically identify objects in your pictures and suggest relevant filters or editing tools. With Core ML, this becomes a reality, making photo editing smarter and more intuitive.

- Natural Language Processing: Chatbots and virtual assistants are everywhere these days. By leveraging Core ML, developers can create conversational interfaces that understand and respond to user queries with remarkable accuracy.

- Recommendation Systems: Whether it’s suggesting movies to watch, music to listen to, or products to buy, recommendation systems powered by Core ML can analyze user preferences and behavior to deliver personalized recommendations tailored to each individual user.

Getting Started with Core ML

Excited to harness the power of Core ML in your own iOS apps? Here’s how to get started:

- Learn the Basics: Familiarize yourself with machine learning concepts and explore Apple’s documentation on Core ML to understand how it works.

- Choose Your Model: Decide which machine learning model best suits your app’s needs. You can train your own model with Create ML, train one with tools like TensorFlow or PyTorch and convert it to the Core ML format with Apple’s coremltools package, or start from one of the many pre-trained models available online.

- Integrate with Xcode: Add the model file (.mlmodel or .mlpackage) to your Xcode project. Xcode compiles it and generates a Swift class you can call to make predictions. Don’t forget to test your app on different devices to ensure smooth performance across the board.

- Iterate and Improve: Machine learning is all about iteration and improvement. Continuously gather feedback from users and refine your models to make your app smarter and more intuitive over time.
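The class Xcode generates for a model is the usual way to call it. As an alternative, the sketch below uses the generic `MLModel` API, which works with any compiled model. The resource name `MyModel` and the input feature name `input` are hypothetical placeholders: check the real names, shapes, and data types in Xcode’s model viewer, since a real model will expect a specific input layout.

```swift
import CoreML
import Foundation

// Wrap raw numbers in a one-dimensional MLMultiArray under the given feature name.
// "input" is a hypothetical name; use the one your model declares.
func makeInput(_ values: [Double], featureName: String = "input") throws -> MLFeatureProvider {
    let array = try MLMultiArray(shape: [NSNumber(value: values.count)], dataType: .double)
    for (i, value) in values.enumerated() {
        array[i] = NSNumber(value: value)
    }
    return try MLDictionaryFeatureProvider(
        dictionary: [featureName: MLFeatureValue(multiArray: array)])
}

// Load a compiled model (.mlmodelc) from the app bundle and run one prediction.
// "MyModel" is a hypothetical resource name. Returns nil if the model is missing.
func predictWithBundledModel(_ values: [Double]) throws -> MLFeatureProvider? {
    guard let url = Bundle.main.url(forResource: "MyModel", withExtension: "mlmodelc") else {
        return nil
    }
    let config = MLModelConfiguration()
    config.computeUnits = .all // let Core ML choose CPU, GPU, or Neural Engine
    let model = try MLModel(contentsOf: url, configuration: config)
    return try model.prediction(from: makeInput(values))
}
```

In practice, you would load the model once and reuse it rather than reloading it for every prediction.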

Vision Framework:

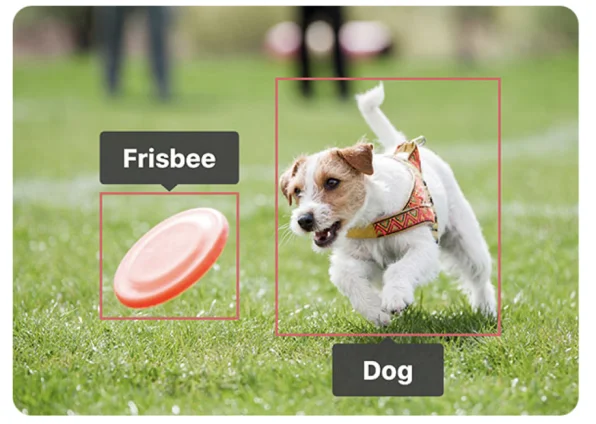

The Vision framework is like a toolbox that helps iPhone and iPad apps understand what they see in images and video. It can detect faces, read text, scan barcodes, and recognize objects. It also works with Core ML, so developers can run their own image models through Vision and build impressive image features without needing to be experts in machine learning. It’s like giving apps a smart helper that lets them see and understand the world better!

Key Features and Capabilities

Let’s take a closer look at some of the key features and capabilities offered by the Vision framework:

- Image Analysis: The Vision framework provides robust support for analyzing images, including detecting faces, identifying facial landmarks, and recognizing barcodes. This enables developers to build apps that can extract valuable information from photos and images with ease.

- Object Detection and Tracking: With the Vision framework, developers can implement advanced object detection and tracking algorithms, allowing apps to identify and track objects in real time. This opens up exciting possibilities for augmented reality applications, games, and more.

- Text Recognition: Another powerful feature of the Vision framework is its ability to recognize and extract text from images. Whether it’s scanning documents, reading signs, or extracting information from receipts, the Vision framework makes text recognition a breeze.

- Machine Learning Integration: Under the hood, the Vision framework leverages machine learning models to power its algorithms. This enables developers to benefit from state-of-the-art computer vision techniques without needing expertise in machine learning.

Real-World Applications

The Vision framework offers a wide range of applications across various industries and use cases. Here are just a few examples:

- Accessibility: Apps can use text recognition to assist users with visual impairments by reading text aloud or providing spoken descriptions of images.

- Security: Security apps can leverage object detection to identify and alert users about potential security threats, such as unauthorized access or suspicious activity.

- Retail: Retail apps can use image analysis to enable features like visual search, allowing users to find products by taking photos or screenshots.

- Healthcare: Healthcare apps can utilize the Vision framework for tasks such as analyzing medical images, monitoring patient vital signs, and detecting anomalies.

Getting Started with the Vision Framework

Ready to harness the power of the Vision framework in your iOS apps? Here are some steps to get started:

- Learn the Basics: Explore Apple’s Vision documentation to see which built-in requests are available, such as face detection, barcode detection, and text recognition.

- Pick a Request: Choose the request type that matches your feature, for example VNRecognizeTextRequest for reading text or VNDetectFaceRectanglesRequest for finding faces.

- Run It on an Image: Create a VNImageRequestHandler for the image, perform the request, and read the resulting observations.

- Add Custom Models: When the built-in requests are not enough, load your own Core ML model as a VNCoreMLModel and run it with a VNCoreMLRequest.
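A minimal sketch of text recognition with Vision, assuming an iOS 15 or macOS 12 SDK or later (where `request.results` is already typed as text observations) and that you already have a `CGImage`, for example from a photo the user picked. The function name `recognizeText(in:)` is hypothetical.

```swift
import CoreGraphics
import Vision

// Recognize text in an image and return the best reading of each detected line.
func recognizeText(in image: CGImage) throws -> [String] {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate   // slower than .fast, but more accurate
    request.usesLanguageCorrection = true  // apply language-model correction to results

    // perform(_:) runs synchronously; in an app, call this off the main thread.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // Each observation is one line of text with ranked candidate strings.
    let observations = request.results ?? []
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

An accessibility feature could pass the returned lines to a speech synthesizer to read them aloud.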

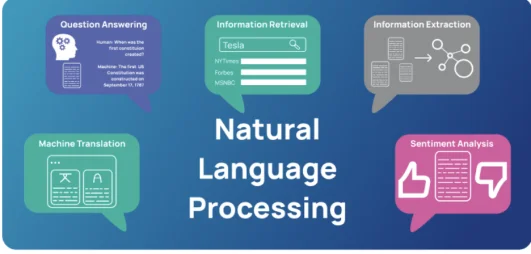

Natural Language Framework:

The Natural Language framework is like a toolkit for iPhone and iPad apps to understand human language better. It helps with tasks like breaking down sentences into words (tokenization), figuring out which language is being used (language identification), and recognizing important names or terms (named entity recognition).

This framework also works with Core ML: developers can train a custom text classifier or word tagger (for example with Create ML) and run it through the Natural Language framework, making their apps even better at understanding language.

In simple words, it’s like giving apps a language-savvy friend to help them understand what people are saying or writing, making them better at communicating with users.
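The three tasks named above map onto `NLTokenizer`, `NLLanguageRecognizer`, and `NLTagger`. This sketch shows one small function for each; the function names and the sample sentence are made up for illustration, and the entities found depend on the system’s built-in models.

```swift
import NaturalLanguage

// Tokenization: split text into word tokens (whitespace and punctuation are skipped).
func words(in text: String) -> [String] {
    let tokenizer = NLTokenizer(unit: .word)
    tokenizer.string = text
    return tokenizer.tokens(for: text.startIndex..<text.endIndex).map { String(text[$0]) }
}

// Language identification: the single most likely language, or nil if unsure.
func dominantLanguage(of text: String) -> NLLanguage? {
    return NLLanguageRecognizer.dominantLanguage(for: text)
}

// Named entity recognition: collect people, places, and organizations.
func namedEntities(in text: String) -> [(text: String, tag: NLTag)] {
    let tagger = NLTagger(tagSchemes: [.nameType])
    tagger.string = text
    let wanted: Set<NLTag> = [.personalName, .placeName, .organizationName]
    var entities: [(text: String, tag: NLTag)] = []
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .nameType,
                         options: [.omitWhitespace, .omitPunctuation, .joinNames]) { tag, range in
        if let tag = tag, wanted.contains(tag) {
            entities.append((text: String(text[range]), tag: tag))
        }
        return true // keep enumerating
    }
    return entities
}

let sample = "Tim Cook spoke about new features at Apple Park in Cupertino."
print(words(in: sample))
print(dominantLanguage(of: sample)?.rawValue ?? "unknown")
for entity in namedEntities(in: sample) {
    print(entity.text, entity.tag.rawValue)
}
```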

Practical Applications of the Natural Language Framework

- Chatbots and Virtual Assistants: By integrating the Natural Language framework, developers can build conversational interfaces that break user queries into words, identify the language, and pick out names and places, which helps the app respond more accurately and improves the overall user experience.

- Content Analysis and Categorization: Content-based apps can use the framework to analyze and categorize text data, making it easier for users to discover relevant content based on their interests and preferences.

- Customer Support and Feedback Analysis: Businesses can leverage the Natural Language framework to analyze customer support interactions and feedback, gaining valuable insights into customer sentiment and concerns.
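For feedback analysis, `NLTagger` can also score sentiment (iOS 13 and later). A minimal sketch, assuming each piece of feedback is a short string; the helper names and sample messages are hypothetical, and the scores come from Apple’s built-in model, so treat them as a rough signal rather than ground truth.

```swift
import Foundation
import NaturalLanguage

// Score one piece of text from -1.0 (very negative) to 1.0 (very positive).
func sentimentScore(of text: String) -> Double {
    guard !text.isEmpty else { return 0 }
    let tagger = NLTagger(tagSchemes: [.sentimentScore])
    tagger.string = text
    // The score is attached to the paragraph and stored as a string such as "0.8".
    let (tag, _) = tagger.tag(at: text.startIndex, unit: .paragraph, scheme: .sentimentScore)
    return Double(tag?.rawValue ?? "") ?? 0
}

// Average sentiment across many feedback messages (0 for an empty list).
func averageSentiment(of feedback: [String]) -> Double {
    guard !feedback.isEmpty else { return 0 }
    return feedback.map(sentimentScore(of:)).reduce(0, +) / Double(feedback.count)
}

let feedback = [
    "I love this app, the new update is fantastic.",
    "The app keeps crashing and support never replied. Terrible experience.",
]
for message in feedback {
    print(sentimentScore(of: message), message)
}
print("Average:", averageSentiment(of: feedback))
```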

Speech Framework:

The Speech framework helps apps listen to what users say and convert it into text (speech-to-text). This can be useful for voice commands, dictation, or transcribing spoken conversations, from live microphone audio or from prerecorded files.

Apps can also go the other way and generate speech from text (text-to-speech) to talk back to users with a voice. This is handled by AVSpeechSynthesizer in AVFoundation rather than by the Speech framework itself, and it is handy for apps like virtual assistants or navigation guides.

Overall, speech recognition and speech synthesis make it easier for apps to interact with users through spoken language, making them more accessible and user-friendly.
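A minimal sketch of speech-to-text for a prerecorded audio file with `SFSpeechRecognizer`. It assumes the app’s Info.plist includes an `NSSpeechRecognitionUsageDescription` entry; `FileTranscriber` and `makeFileRequest(for:)` are hypothetical names. Live microphone transcription follows the same pattern but uses `SFSpeechAudioBufferRecognitionRequest` fed with audio from `AVAudioEngine`.

```swift
import Foundation
import Speech

// Build a request for an audio file; only the final transcript is wanted.
func makeFileRequest(for url: URL) -> SFSpeechURLRecognitionRequest {
    let request = SFSpeechURLRecognitionRequest(url: url)
    request.shouldReportPartialResults = false
    return request
}

// Holds the recognizer and task so they stay alive while recognition runs.
final class FileTranscriber {
    private let recognizer: SFSpeechRecognizer?
    private var task: SFSpeechRecognitionTask?

    init(locale: Locale = Locale(identifier: "en-US")) {
        recognizer = SFSpeechRecognizer(locale: locale) // nil if the locale is unsupported
    }

    // Ask for permission, then transcribe. Calls completion with nil on failure.
    func transcribe(fileAt url: URL, completion: @escaping (String?) -> Void) {
        SFSpeechRecognizer.requestAuthorization { [weak self] status in
            guard let self = self,
                  status == .authorized,
                  let recognizer = self.recognizer,
                  recognizer.isAvailable else {
                completion(nil)
                return
            }
            self.task = recognizer.recognitionTask(with: makeFileRequest(for: url)) { result, error in
                if let result = result, result.isFinal {
                    completion(result.bestTranscription.formattedString)
                } else if error != nil {
                    completion(nil)
                }
            }
        }
    }
}
```

Note that the completion handler is called on a background queue, so hop to the main queue before updating the UI.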

Voice features on iOS typically combine three capabilities, each provided by a different framework:

- Speech Recognition (Speech framework): This feature allows apps to transcribe spoken words into text, enabling users to input commands, dictate messages, or perform searches using their voice.

- Speech Synthesis (AVFoundation): With AVSpeechSynthesizer, developers can convert text into spoken words, allowing apps to provide auditory feedback, read content aloud, or create interactive voice-guided experiences.

- Natural Language Understanding (Natural Language framework and Core ML): Once speech has been transcribed, apps can analyze the resulting text, for example to identify names or classify what the user wants, enabling more sophisticated interactions and personalized experiences.
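Speech synthesis goes through `AVSpeechSynthesizer`. This sketch builds an utterance and speaks it; `makeUtterance` and `VoiceGuide` are hypothetical names, and the voice falls back to the system default when no voice for the requested language is installed.

```swift
import AVFoundation

// Build an utterance for the given text, language, and speaking rate.
func makeUtterance(_ text: String,
                   languageCode: String = "en-US",
                   rate: Float = AVSpeechUtteranceDefaultSpeechRate) -> AVSpeechUtterance {
    let utterance = AVSpeechUtterance(string: text)
    // Nil if no voice for this language is installed; the default voice is used then.
    utterance.voice = AVSpeechSynthesisVoice(language: languageCode)
    // Clamp the rate to the range AVFoundation supports.
    utterance.rate = min(max(rate, AVSpeechUtteranceMinimumSpeechRate),
                         AVSpeechUtteranceMaximumSpeechRate)
    return utterance
}

// Keep the synthesizer in a long-lived object; if it is deallocated, speech may stop.
final class VoiceGuide {
    private let synthesizer = AVSpeechSynthesizer()

    func say(_ text: String, languageCode: String = "en-US") {
        synthesizer.speak(makeUtterance(text, languageCode: languageCode))
    }

    func stop() {
        synthesizer.stopSpeaking(at: .immediate)
    }
}
```

A navigation app, for instance, might store a `VoiceGuide` on its view model and call `say("Turn left in 200 meters.")` at each step.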

Practical Applications

Together, these speech capabilities open up a wide range of possibilities for developers across various industries. Here are some practical applications:

- Voice Dictation: Text input via voice is becoming increasingly popular, particularly in messaging apps, note-taking apps, and productivity tools.

- Virtual Assistants: Apps can leverage speech recognition and natural language understanding to create virtual assistants capable of understanding and responding to user queries, providing personalized recommendations, and performing tasks on behalf of the user.

- Accessibility Features: Voice control and screen reader integration empower users with disabilities to interact with apps more effectively, improving accessibility and inclusivity.

- Language Learning: Language learning apps can use speech synthesis to model correct pronunciation and speech recognition to check a learner’s attempts, enabling interactive conversational practice that enhances the learning experience.

Conclusion

In essence, machine learning is revolutionizing the way we interact with iOS apps, making them smarter, more intuitive, and more capable than ever before. By embracing these technologies, developers can create innovative and compelling experiences that delight users and set their apps apart in a crowded marketplace. With the continuous advancements in machine learning and iOS development, the future holds endless possibilities for creating even more intelligent and immersive iOS apps.
